December 22, 2017

University Rankings: Unavoidable Despite Flaws

The Times Higher Education (THE) World University Rankings are attracting attention. When the THE 2017-2018 rankings were released last fall, the University of Tokyo, Japan's top university, dropped from 39th to 46th place, and headlines lamenting its "worst ever" ranking were splashed across Japanese newspapers. Ten years ago the university ranked 17th worldwide and first in Asia, but this year it was only sixth in Asia; the top five Asian positions were taken by universities in Singapore, China, and Hong Kong. Among Japanese universities, Kyoto University came next, rising from 91st to 74th place, followed by Osaka University and Tohoku University (both in the 201-250 band), the Tokyo Institute of Technology (251-300), and Nagoya University (301-350). Incidentally, ten years ago Nagoya University was sixth in Japan, as it is today, but it ranked 112th worldwide. For Japanese universities, these latest rankings were disappointing.

Looking at data on the number of papers published and their citations, however, we see that Japanese research output peaked in 1997 and that Japan's international share has fallen ever since. Even observers overseas have pointed out the decline in public funding for Japanese universities, which lag in internationalization behind not only Western universities but also other Asian universities. Given this situation, perhaps it is not so surprising that Japanese universities have slipped in the rankings.

How should these results be interpreted? And ultimately, what are university rankings anyway?

Nagoya University aims to be one of the world's leading research universities.

First published in 2004, the THE rankings are regarded as the most influential. The other two of the "big three" international university rankings are the World University Rankings compiled by Quacquarelli Symonds (QS), also based in the UK and launched in the same year, and the Academic Ranking of World Universities (ARWU), launched a year earlier, in 2003, by Shanghai Jiao Tong University. Over the past ten years, these rankings have been widely covered by the mainstream press and have become a subject of discussion in Japan.

Rankings like these attract much criticism and doubt. In "World University Rankings and the Hegemonic Restructuring of Knowledge" (Kyoto University Press), Professor Mayumi Ishikawa of Osaka University points out that these systems, which attempt to quantify and uniformly rank the highly diverse universities of the world using the same measure, "have been criticized for having no meaningful value in suitability and accuracy." She also argues that attempts to "uniformly rank universities using over-simplified indicators cause especially big problems for non-English-speaking countries like Japan."

In his book "A Warning from Oxford," Professor Takehiko Kariya of the University of Oxford writes, "We cannot deny the view that Japan has been completely caught up in the marketing strategies of English-speaking countries." He points out that what we call "the globalization of education" has in reality been driven by the rapid growth, since about 2000, in the number of Chinese students studying abroad. The UK government has made attracting students from China a top policy priority as a means of bringing in foreign money. University rankings emerged in that context, and top US and UK universities have claimed the top of the lists. He strongly warns about the dangers of discussing policy without properly understanding the true nature of the rankings. In 2013 the Japanese government declared a policy target of having at least ten Japanese universities in the top 100 within ten years as part of its national growth strategy, and this is a perfect example of what he was warning about.

The big three ranking systems are in fact run from both sides: the countries that receive students and the countries that send them. In that sense, the objectives of these systems are clear. Yet even for other countries, rankings like these can serve as a yardstick by which universities select and are selected. Japanese universities cannot ignore the rankings. That seems to be the reality.

I spoke with the Academic Research & Industry-Academia-Government Collaboration Center at Meidai, as Nagoya University is commonly known, which analyzes university rankings. In a report summarizing the THE rankings, the Center says that the ranking order of Japanese universities did not change significantly over the past two years. The report notes, however, that Japanese universities dropped across the board in 2015. Because this came just after the government had announced its ambitious target, many people still remember the headlines about Japan's falling university rankings. Much fuss was made of the news that the University of Tokyo was no longer the top-ranked university in Asia and that the number of Japanese universities in the top 200 had fallen from five to just two. The report attributes the drop to a broad reduction in citation scores resulting from changes in the publication data used.

Obviously, if the method of compiling the data changes, the results will change too. The rankings were published with a note that it would not be appropriate to compare the new results directly with the previous year's. Nevertheless, direct comparisons with the previous year took on a life of their own.

So how exactly did the data on published papers change, and how did this change affect the results?

The components of the THE rankings are weighted as follows: teaching, research, and citations each account for 30%, while international outlook accounts for 7.5% and industry income for 2.5%. The more often a paper is cited, the more weight it carries, on the assumption that citations indicate research quality. Within the teaching and research components, about half of each is attributed to "reputation" (15% for teaching and 18% for research). In other words, part of each score reflects how highly tens of thousands of researchers around the world rate the work of particular universities in their fields. Each of these specific factors is scored in turn, and the ranking order is then calculated from the weighted scores. The weighting clearly favors research, in particular research in the sciences, where more papers tend to be published. For the humanities and social sciences, however, the evaluation relies largely on the reputation survey, and how that survey is handled is something of a black box.
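To make the effect of these weights concrete, here is a minimal sketch that treats the overall score as a simple weighted sum of the five pillar scores, each assumed to be on a 0-100 scale. The names `THE_WEIGHTS` and `overall_score` and all pillar scores are hypothetical and for illustration only; THE's actual calculation, which scores many sub-indicators before combining them, is more involved and is not modeled here.

```python
import math

# Pillar weights for the THE rankings as given in the article (fractions of 100%).
THE_WEIGHTS = {
    "teaching": 0.30,               # about half of this (15%) is reputation
    "research": 0.30,               # 18% of this is reputation
    "citations": 0.30,
    "international_outlook": 0.075,
    "industry_income": 0.025,
}
assert math.isclose(sum(THE_WEIGHTS.values()), 1.0)


def overall_score(pillar_scores: dict[str, float]) -> float:
    """Hypothetical weighted sum of pillar scores, each on a 0-100 scale."""
    missing = set(THE_WEIGHTS) - set(pillar_scores)
    if missing:
        raise ValueError(f"missing pillar scores: {sorted(missing)}")
    return sum(w * pillar_scores[name] for name, w in THE_WEIGHTS.items())


# Hypothetical pillar scores for an illustrative university (not real data).
before = {"teaching": 60.0, "research": 55.0, "citations": 70.0,
          "international_outlook": 40.0, "industry_income": 80.0}
# The same university after a 20-point fall in its citation score only.
after = {**before, "citations": 50.0}

print(f"before: {overall_score(before):.1f}")
print(f"after:  {overall_score(after):.1f}")
```

This prints 60.5 and 54.5: under these assumptions, a 20-point fall in the citations pillar alone costs 0.30 × 20 = 6 points overall, which illustrates why changes to the citation data can shift rankings noticeably.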

In reputation-based surveys like this, universities outside Europe and North America tend to be at a disadvantage. Instead of surveying reputations, ARWU judges the level of teaching and research by the receipt of prestigious awards such as the Nobel Prize or the Fields Medal (awarded at the International Congress of Mathematicians) by active professors or university graduates. Meidai in 2015 and the Tokyo Institute of Technology in 2017 saw their rankings rise significantly thanks to Nobel Prizes awarded the previous year. In both cases, however, the awards were for academic achievements made much earlier, and in the latter case in particular, Honorary Professor Emeritus Yoshinori Ohsumi had moved to the Tokyo Institute of Technology only a few years before. So the rankings cannot really be said to reflect a university's present capabilities.

A major change in the 2015 THE rankings concerned the database of published papers, which serves as the basis for citation counts. The key change was a switch of data partner from Thomson Reuters to Elsevier. The former reviewed about 12,000 influential publications, while the latter reviews about 22,000, including academic journals in Japanese and other non-English languages. Although the overall number of papers counted increased, the data inevitably consists mainly of English-language citations, and critics have pointed out that citations in other languages may be at a disadvantage. In addition, the regional adjustment previously applied when compiling citation counts was stopped, meaning that less consideration is given to countries outside Europe and North America. Papers with a thousand or more authors, such as those reporting large-scale particle physics experiments, were also excluded. Unsurprisingly, these changes attracted considerable criticism, and the following year it was decided to include such papers again, with some revisions.

As a result of changes like these, Japanese universities saw a big drop in citation scores, and because this component carries a heavy 30% weight, their overall rankings apparently fell as well. The drop in citation scores was not confined to Japan; it was also significant in other non-English-speaking countries. Meidai could not escape this fate, falling from the 226-250 band the previous year to 301-350.

In fact, significant changes occurred in 2010 as well. For example, the number of evaluation criteria increased from six to 13, and the weighting of paper citations increased from 20% to 32.5%. That time too, Japanese universities dropped significantly in the rankings, with their number in the top 200 more than halved, from 11 to just five.

Incidentally, the latest QS World University Rankings, another of the "big three," placed five Japanese universities in the top 100 (the University of Tokyo, Kyoto University, the Tokyo Institute of Technology, Osaka University, and Tohoku University), and Meidai came in at 116th. The QS criteria have not changed significantly, and Japanese universities as a whole have held steady or risen in its rankings in recent years. This shows once again that evaluations of the diverse activities of universities are a product of the measures used, so it is essential to have diverse evaluation methodologies.

Yoshie Tsutsumi, a research administrator at the Academic Research & Industry-Academia-Government Collaboration Center mentioned above, was involved in the analysis. She says, "The important thing is not strategizing to raise our rankings, but rather using the rankings as indicators to boost our research capabilities, and that is what Meidai is aiming for." In other words, the point is not to become obsessed with individual rankings.

Professor Emeritus Hideyo Kunieda, who as the commissioner responsible for research at Meidai has been involved with university rankings, says that while he recognizes the inherent problems with rankings themselves, "as a baseline for intentionally trying to raise the quality of teaching and research at Meidai, we should use them and all the tools available." For example, since it is important to increase the number of students who study at universities outside Japan, he would aim to improve the relevant indicators through fair and square initiatives that also strengthen research, such as efforts to let graduate students obtain joint degrees with top-ranked universities abroad. To that end, he would like to build relationships with other universities that enable genuine joint research. We often hear these days about the need to increase jointly authored papers, but within such relationships, these papers would come quite naturally.

It is also important to expand the university's international activities and make them visible to others. The Nagoya University Institute for Advanced Research (IAR) was created in 2002 as a university-wide interdisciplinary academy. It serves on the steering committee of the University-Based Institutes of Advanced Study (UBIAS), an international organization of over 30 university-affiliated institutes for advanced research. Meidai hosted the UBIAS annual meeting at the end of November 2017, which attracted top representatives from other countries. Hisanori Shinohara, Director of the IAR, says that global networking through activities like these raises international awareness of Meidai in the long term, and this can have ripple effects on research and teaching as well.

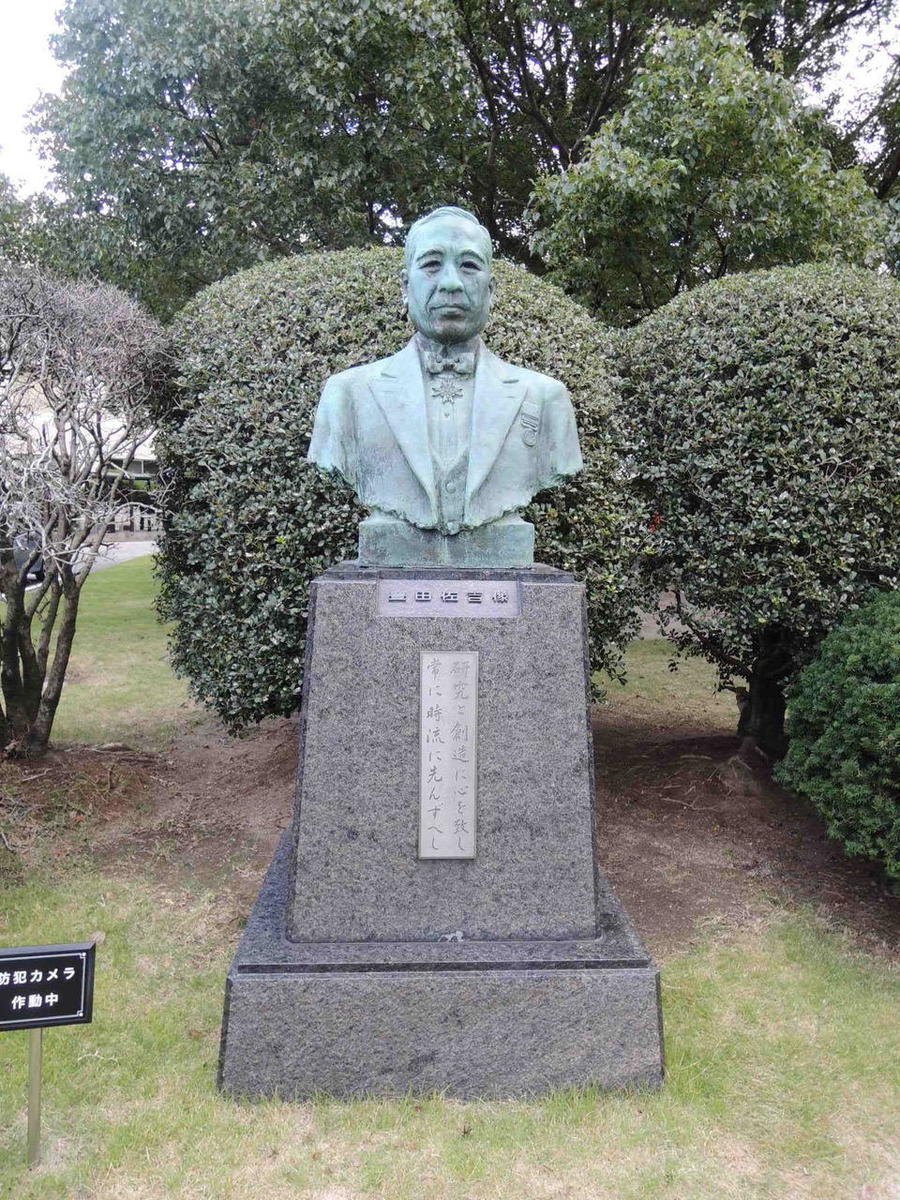

At Toyota Technological Institute, a bust of Sakichi Toyoda, who laid the foundations of the Toyota group of companies. With the motto of "Respect the spirit of research and creativity, and always strive to stay ahead of the times," TTI was launched in 19

How do we avoid getting tripped up by rankings? Some universities have taken a smart approach to this. Consider, for example, the Toyota Technological Institute (TTI). This distinctive university, where all first-year undergraduates live in dormitories, provides a practical engineering education while also fostering students' personal qualities. TTI drew considerable attention when it first appeared in the THE rankings in 2016, placing seventh in Japan, right after Meidai, and in the 351-400 band internationally. Its citation score was especially high, and this seems to have boosted its ranking. One of its sister schools is the Toyota Technological Institute at Chicago (TTIC), an institute with strengths in computer science, and citations of TTIC papers may have boosted TTI as well. Hiroyuki Sakaki, president of TTI, said of this, "We are pouring a lot of effort into teaching and this is producing results, but we still have more work to do in research." Not wanting to be overly influenced by rankings, he decided not to provide data the following year, and as a result the institute disappeared from the 2017 rankings. Going forward, he says he would treat THE as one of several indicators, for example by participating every few years.

Finally, I would like to introduce some research that encourages us to look at ranking numbers rationally. It concerns papers published in China, a country whose presence in research is growing rapidly. China is second only to the US in both the number of papers published and citation count. Among the 3,000 most frequently cited authors listed by Thomson Reuters, 115 were from China, more than the 79 from Japan. As mentioned above, citations are weighted heavily in the THE rankings, and this year Peking University ranked 27th and Tsinghua University 30th, ahead of all Japanese universities in the overall rankings. Both also outscored the Japanese universities on citations.

Everyone acknowledges China's growing prominence in research, but do the citation numbers match how experts perceive that research? With this question in mind, Yukihide Hayashi, a senior fellow with the Japan Science and Technology Agency, and his team investigated the correlation between citation levels and research quality. He asked Japanese experts to assess these researchers, and also examined their records of receiving international awards such as the Nobel Prize and other international academic recognition. He found that 31 of the 79 Japanese researchers had received such recognition, compared with seven of the 115 Chinese researchers. He also found that only eight of the Chinese researchers were regarded by the experts he polled as internationally top-level researchers.
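Raw counts like these are easier to compare as proportions of each group. The short sketch below only restates the article's figures as percentages; it is not Hayashi's method, and the helper name `share` is hypothetical.

```python
# Counts reported in the article for highly cited researchers.
groups = {
    # country: (researchers with major international recognition, total listed)
    "Japan": (31, 79),
    "China": (7, 115),
}
# Chinese researchers regarded as internationally top-level by the polled experts.
china_rated_top_by_experts = 8


def share(part: int, whole: int) -> float:
    """Fraction of `whole` represented by `part`."""
    return part / whole


for country, (recognized, total) in groups.items():
    print(f"{country}: {recognized}/{total} = {share(recognized, total):.1%} recognized")

china_total = groups["China"][1]
print(f"China, rated top-level by experts: "
      f"{share(china_rated_top_by_experts, china_total):.1%}")
```

Roughly 39% of the Japanese researchers had such recognition, against about 6% of the Chinese researchers, and about 7% of the Chinese researchers were rated top-level by the experts.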

Based on these results, Hayashi concludes that an abundance of citations does not necessarily mean high-quality research. One factor that might inflate citation counts, he suggests, is an emphasis on quantitative output in China, which may spur researchers to write as many papers as possible and to cite one another, behavior that may even be encouraged.

Hayashi acknowledges the limitations of his team's study, which is not based on quantitative analysis, but still says it is important to look at the numbers rationally.

University rankings are just rankings. But rankings are rankings nonetheless. Our only choice is to deal with them without getting too caught up in them. Professor Emeritus Fumitaka Sato, a physicist from Kyoto University, said that since we cannot avoid these kinds of evaluations, we might as well use several. Rankings are often produced as a kind of business by companies and organizations, each with its own character and motives. The real question is how to evaluate the diverse research activities of universities, including those in the humanities and social sciences, and how to convey all of this to the world. Work is still needed there.

There is one more thing to mention. As I noted at the start, behind the unimpressive rankings of Japanese universities lies a long-term decline in Japan's share of published papers. This is directly connected to what is happening on the front lines of university research. It will be important to face these issues squarely and address them. This is much more difficult than any measure targeting individual rankings, but more than anything else, it is what is needed.